AR Reading & Taking Notes

Oct 2018 - Now

UX Designer / UX Prototyper

Made with Unity 3D, Keynote

I created a new AR GUI design framework called "Surface" and tested it in six different working scenarios.

Augment the physical world with the power of the digital one.

Give physical attributes to digital objects.

Design Challenge

This classic working scenario, reading while taking notes, brought me two design challenges:

- Context-based interaction design: remote control or direct touch (for example, multi-page interactions)
- Typing in AR

I proposed solutions for both challenges, but whether they are good is still open to discussion.

The Story of AR Reading and Taking Notes

Features

- Place your reading materials and your notebooks wherever you want
- Highlight sentences or words while reading
- Zoom in or zoom out
- Keep multiple pages open
- Take notes by typing
- Take notes by handwriting

User flow

Sit down in front of a table.

Read.

Take Notes.

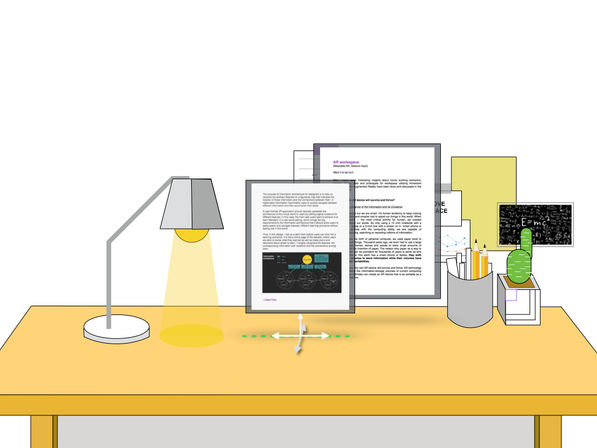

Preparation - Place your reading materials and your notebooks

Select the surface to place your reading materials.

Select the orientation of your reading materials' container.

Select a specific position for your container.

You are all set!

Preparation - Rearrange your virtual reading pages

Touch the shadows!

If you attached your digital pages to the table, you can touch a page to move it!

Preparation - Rotate your virtual reading pages

Close one page among multiple pages

If you attached your digital pages to the table, you can long-tap a page to close it!

Reading - Turn Pages

Open the system settings!

Touch the "forward" button.

If you attached your digital pages to the table, you can swipe to turn the page!

Reading - Zoom in or out

Touch the "larger" button.

If you attached your digital pages to the table, you can scale the page with your fingers!

Reading - Larger Screen!

Touch the "bigger" button.

If you attached your digital pages to the table, you can drag to make your screen bigger!

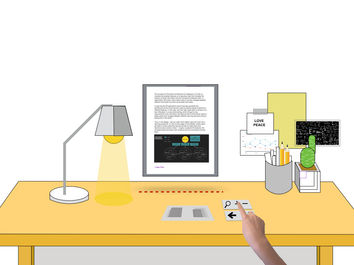

Take Notes

Put your digital notebook in the physical world!

Highlight the content that you are interested in!

You can either handwrite or type your notes!

Design Considerations

The biggest benefit of drawing these storyboards is that I can go through every detail of the interaction and determine whether a specific design works in a specific context. Now I will discuss my understanding of the two design challenges I listed at the very beginning. I’ll give my solutions and leave some meaningful research questions open to think about later.

①Context-based interaction design: remote control or direct touch.

The choice of interaction method should fully consider the user’s comfort. AR design blends the digital world with the physical one, so unlike touchscreen interaction design, it involves diverse types of user movement, including touching, typing, mouse selection, voice input, arm movement, and even walking around. Using an interaction method that does not suit the situation carries a high risk of usability problems in terms of learnability, efficiency, memorability, errors, and satisfaction, according to Nielsen’s usability principles.

Before we can reasonably choose among these interaction methods, we first need to picture a specific user scenario that takes human dimensions in workspaces, anthropometry, and ergonomics into consideration.

The following four factors matter (see Google I/O 2018 AR design):

- The user’s environment: table scale, room scale, or world scale.
- The user’s movement: moving around, sitting, or standing still.
- Task characteristics: high or low frequency; rough selection or delicate operations (typing, writing, or continuous selection); continuous or discrete.
- The position of the virtual AR screen. In the AR application, users can create virtual screens wherever they want, so the application should provide context-based interactions for the different positions of these screens. Is the screen attached to a physical surface or in mid-air? Vertical or horizontal? Close to the user or far from the user?

Some principles from ergonomics can help with selecting interactions:

- For delicate operations: gaze + gesture is tiring; typing is more effective
- Close to the user: touch is better
- Far from the user: laser pen or remote control

Candidate interaction methods: remote control (which requires a control panel), direct touch, selection device, handwriting, typing, voice input, gesture, and gaze.
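As a rough illustration, the three rules of thumb above could be sketched as a small decision function. This is only a sketch, not how the prototype is implemented: the names `Task`, `ScreenContext`, and `suggest_interaction` are hypothetical, and the arm's-reach threshold `REACH_LIMIT_M` is an assumed placeholder rather than a measured value.

```python
from dataclasses import dataclass
from enum import Enum


class Task(Enum):
    ROUGH_SELECTION = "rough selection"
    DELICATE = "delicate operation"  # typing, writing, continuous selection


# Assumed arm's-reach threshold in meters (placeholder, not a measured value).
REACH_LIMIT_M = 0.6


@dataclass
class ScreenContext:
    distance_m: float  # distance from the user to the virtual screen


def suggest_interaction(ctx: ScreenContext, task: Task) -> str:
    """Apply the three rules of thumb in priority order."""
    if task is Task.DELICATE:
        # Gaze + gesture is tiring for delicate work, so prefer typing.
        return "typing"
    if ctx.distance_m <= REACH_LIMIT_M:
        # Close to the user: touch is better.
        return "direct touch"
    # Far from the user: point at the screen or use a remote control.
    return "laser pen or remote control"
```

For example, a rough selection on a screen within the assumed reach limit maps to direct touch, while the same task on a screen two meters away maps to the laser pen or remote control option. A real design would also weigh the other factors listed earlier, such as the user's movement and the screen's orientation.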

In this case, users read while taking notes, so the specific usage scenario is:

The user sits at a table, performing some high-frequency interactive tasks and occasional low-frequency simple tasks. They can place the virtual screen in mid-air, on the table, or on a wall in front of them. Let’s see how I make the trade-offs for specific user tasks.

Context — Highlight the sentences

Task characteristic: precise selection.

Context — Turn pages

Task characteristic: low-frequency simple operations

Context — Taking notes

Task characteristic: delicate operations (typing or writing or continuous selections).

Context — Arrange Multiple Pages

Task characteristic: low-frequency + simple operations

Context — Close the Page

Task characteristic: low-frequency + simple operations

②Typing in AR

Digital keyboard or Physical keyboard?

"The conventional keyboard is actually pretty good at what it does."

"Why We Can’t Quit the QWERTY Keyboard" (Medium)

AR typing

"Recognition of typing motions on AR typing interface"

Masae Okada (Japan Women's University, Tokyo, Japan)

Masakazu Higuchi (Saitama University, Saitama City, Japan)

Takashi Komuro (Saitama University, Saitama City, Japan)

Kayo Ogawa (Japan Women's University, Tokyo, Japan)

Potential Research Questions

① AR typing

② Delicate and continuous selection: what affects users’ satisfaction? Compare different devices: traditional mouse, laser pen, gaze + gesture, or voice?

③ Mid-air touch vs tangible interface touch?

Prototyping to Test

Similar Works